Discover how visual data types like thermal imaging, LiDAR, and infrared images enable diverse computer vision applications across industries.

Technology such as drones was once limited to researchers and specialists, but cutting-edge hardware is now reaching a much wider audience. This shift is changing the way we collect visual data: images and videos can now be captured from a variety of sources, beyond just traditional cameras.

In parallel, image analytics is evolving rapidly. It is powered by computer vision, a branch of artificial intelligence (AI) that allows machines to interpret and process visual data. This progress has opened up new possibilities for automation, object detection, and real-time analysis: machines can now recognize patterns, track movement, and make sense of complex visual inputs.

Some key types of visual data include RGB (Red, Green, Blue) images, which are commonly used for object recognition; thermal imaging, which helps detect heat signatures in low-light conditions; and depth data, which enables machines to understand 3D environments. Each of these data types plays a vital role in powering various applications of Vision AI, ranging from surveillance to medical imaging.

In this article, we’ll explore the key types of visual data used in Vision AI and look at how each contributes to improving accuracy, efficiency, and performance across various industries. Let’s get started!

Typically, when you take a photo with a smartphone or view CCTV footage, you're working with RGB images. RGB stands for red, green, and blue, the three color channels that represent visual information in digital images.
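To make the idea of color channels concrete, here is a minimal sketch in plain Python. It assumes an image stored as a grid of `(red, green, blue)` tuples with values from 0 to 255; the helper names `split_channels` and `to_grayscale` are illustrative, not part of any library. The grayscale weights are the widely used Rec. 601 luma coefficients.

```python
# A tiny 2x2 RGB image: each pixel is a (red, green, blue) tuple, 0-255 per channel.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]


def split_channels(img):
    """Return three 2D grids, one per color channel."""
    red = [[px[0] for px in row] for row in img]
    green = [[px[1] for px in row] for row in img]
    blue = [[px[2] for px in row] for row in img]
    return red, green, blue


def to_grayscale(img):
    """Collapse RGB to a single intensity per pixel (Rec. 601 luma weights)."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
        for row in img
    ]


red, green, blue = split_channels(image)
gray = to_grayscale(image)
print(red)   # the red channel on its own
print(gray)  # one brightness value per pixel
```

In practice, image libraries store the same information as a height x width x 3 array, but the structure is the same: three aligned grids of intensity values.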

RGB images and videos are closely related types of visual data used in computer vision, both captured using standard cameras. The key difference is that images capture a single moment, while videos are a sequence of frames that show how things change over time.

RGB images are generally used for computer vision tasks like object detection, instance segmentation, and pose estimation, supported by models like Ultralytics YOLO11. These applications rely on identifying patterns, shapes, or specific features in a single frame.

Videos, on the other hand, are essential when motion or time is a factor, such as for gesture recognition, surveillance, or tracking actions. Since videos can be considered a series of images, computer vision models like YOLO11 process them frame by frame to understand movement and behavior over time.
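The frame-by-frame idea can be sketched without any vision library. In the example below, `detect_centroid` is a hypothetical stand-in for a real detector (it is not the YOLO11 API), and the "video" is a list of small synthetic grayscale frames. The point is only to show how per-frame results are chained into a track over time.

```python
def detect_centroid(frame, threshold=128):
    """Hypothetical stand-in detector: centroid (row, col) of bright pixels, or None."""
    hits = [
        (r, c)
        for r, row in enumerate(frame)
        for c, value in enumerate(row)
        if value >= threshold
    ]
    if not hits:
        return None
    n = len(hits)
    return (sum(r for r, _ in hits) / n, sum(c for _, c in hits) / n)


def make_frame(col, width=5, height=3):
    """Synthetic grayscale frame with one bright pixel in the middle row."""
    frame = [[0] * width for _ in range(height)]
    frame[1][col] = 255
    return frame


# Four frames in which the bright object moves one column to the right each time.
video = [make_frame(col) for col in range(4)]

# Run the detector on every frame, then compare consecutive positions.
track = [detect_centroid(frame) for frame in video]
steps = [b[1] - a[1] for a, b in zip(track, track[1:])]

print(track)  # object position in each frame
print(steps)  # horizontal movement between consecutive frames
```

A real pipeline would replace the stand-in with a trained model and add logic for matching multiple objects between frames, but the loop structure stays the same.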

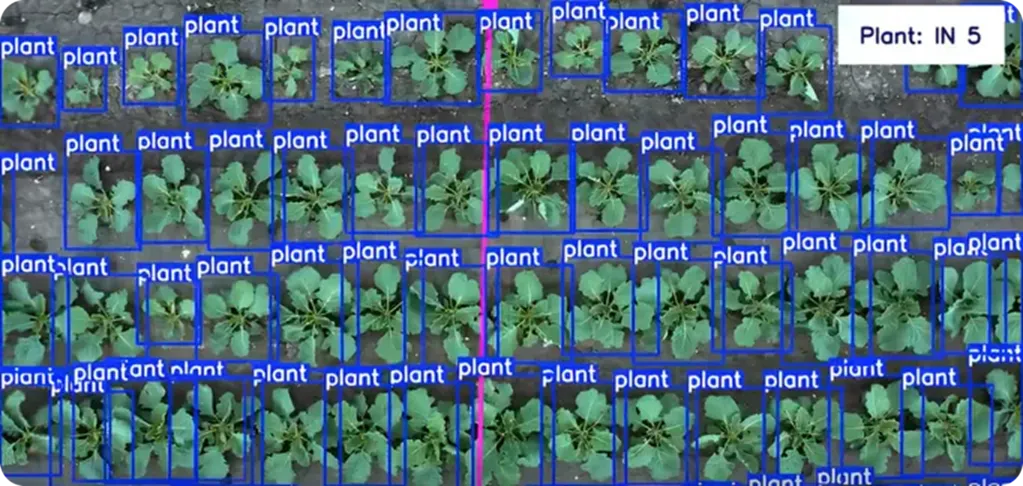

For instance, YOLO11 can be used to analyze RGB images or videos to detect weeds and count plants in agricultural fields. This enhances crop monitoring and helps track changes across growing cycles for more efficient farm management.

Depth data adds a third dimension to visual information by indicating how far objects are from the camera or sensor. Unlike RGB images, which capture only color and texture, depth data provides spatial context, making it possible to interpret the 3D layout of a scene.

This type of data is captured using technologies like LiDAR, stereo vision (two cameras that mimic human depth perception), and Time-of-Flight cameras (which measure the time it takes for light to travel to an object and back).
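For stereo vision, depth is commonly recovered from disparity, the horizontal shift of the same point between the left and right images. The sketch below assumes an idealized, rectified pinhole stereo pair, where depth equals focal length times baseline divided by disparity. The function name and the camera values are illustrative assumptions, not taken from a specific device.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth in meters for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Assumed camera: 700 px focal length, cameras mounted 12 cm apart.
near = stereo_depth(focal_px=700, baseline_m=0.12, disparity_px=42)
far = stereo_depth(focal_px=700, baseline_m=0.12, disparity_px=21)

print(near, far)  # halving the disparity doubles the estimated depth
```

The inverse relationship is why stereo depth becomes less precise for distant objects: far away, a one-pixel disparity error corresponds to a large change in depth.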

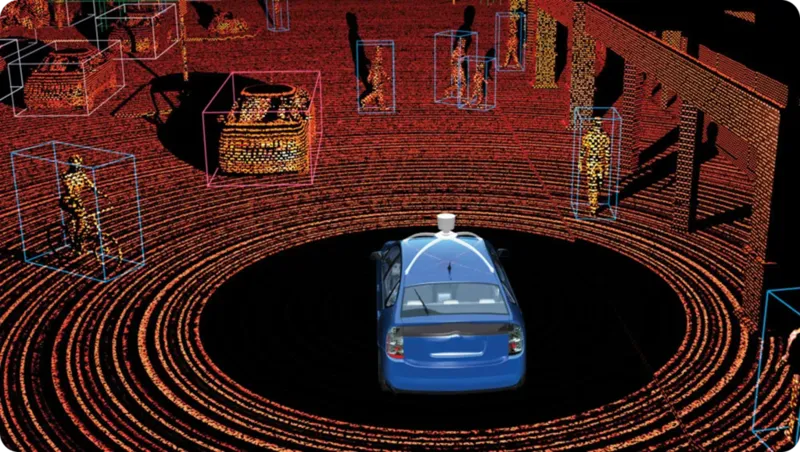

Among these, LiDAR (Light Detection and Ranging) is often the most reliable for depth measurement. It works by sending out rapid laser pulses and measuring how long they take to bounce back. The result is a highly accurate 3D map, known as a point cloud, which highlights the shape, position, and distance of objects in real time.
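As a rough illustration of how pulse timing becomes a point cloud, the sketch below converts round-trip times and beam angles into 3D coordinates. The range formula (speed of light times round-trip time, divided by two) is standard; the sample returns, the axis convention, and the function names are assumptions made for this example, and real sensors apply additional calibration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def pulse_range(round_trip_s):
    """Distance to the target: the pulse travels out and back, so divide by two."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_point(range_m, azimuth_deg, elevation_deg):
    """Convert a range and two beam angles to (x, y, z) in the sensor frame."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = range_m * math.cos(el) * math.cos(az)
    y = range_m * math.cos(el) * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)


# Hypothetical returns: (round-trip time in seconds, azimuth, elevation).
returns = [
    (66.7e-9, 0.0, 0.0),
    (133.4e-9, 90.0, 0.0),
    (66.7e-9, 0.0, 30.0),
]

point_cloud = [to_point(pulse_range(t), az, el) for t, az, el in returns]
for point in point_cloud:
    print(tuple(round(v, 3) for v in point))
```

A round trip of about 67 nanoseconds corresponds to roughly 10 meters, which gives a sense of the timing precision LiDAR hardware needs.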

LiDAR technology can be split into two main types, each designed for specific applications and environments.

An impactful application of LiDAR data is in autonomous vehicles, where it plays a key role in tasks such as lane detection, collision avoidance, and identifying nearby objects. LiDAR generates detailed, real-time 3D maps of the environment, enabling the vehicle to see objects, calculate their distance, and navigate safely.

RGB images capture what we see in the visible light spectrum; however, other imaging technologies, like thermal and infrared imaging, go beyond this. Infrared imaging captures infrared light that is emitted or reflected by objects, making it useful in low-light conditions.

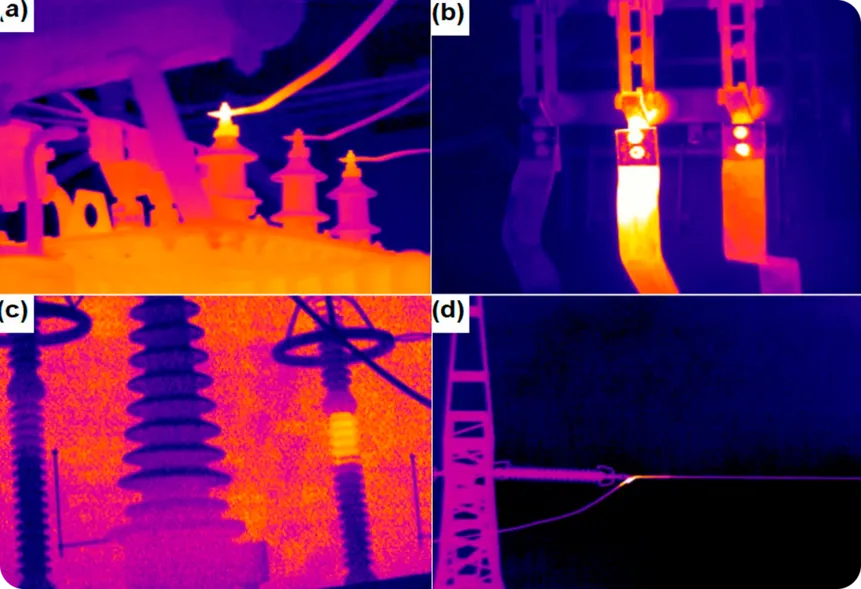

Thermal imaging, in contrast, detects the heat emitted by objects and shows temperature differences, allowing it to work in complete darkness or through smoke, fog, and other obstructions. This type of data is particularly useful for monitoring and detecting issues, especially in industries where temperature changes can signal potential problems.

An interesting example is thermal imaging being used to monitor electrical components for signs of overheating. By detecting temperature differences, thermal cameras can identify issues before they result in equipment failure, fires, or costly damage.
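A simple version of this kind of check can be sketched as a temperature threshold over a thermal frame. The example assumes the camera already provides per-pixel temperatures in degrees Celsius; the 70 °C limit and the sample readings are hypothetical values chosen for illustration, not a recommended safety threshold.

```python
def find_hotspots(temps_c, limit_c):
    """Return (row, col, temperature) for every pixel hotter than limit_c."""
    return [
        (r, c, t)
        for r, row in enumerate(temps_c)
        for c, t in enumerate(row)
        if t > limit_c
    ]


# Hypothetical 3x3 thermal frame of an electrical panel, in degrees Celsius.
thermal_frame = [
    [31.0, 32.5, 30.8],
    [33.1, 78.4, 35.0],
    [30.2, 34.9, 82.0],
]

hotspots = find_hotspots(thermal_frame, limit_c=70.0)
print(hotspots)  # locations and temperatures of pixels above the limit
```

A production system would more likely compare each component against its own baseline or rated temperature, but the underlying operation is the same: turning a grid of temperatures into a short list of locations worth inspecting.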

Similarly, infrared images can help detect leaks in pipelines or insulation by identifying temperature differences that indicate escaping gases or fluids, which is crucial for preventing hazardous situations and improving energy efficiency.

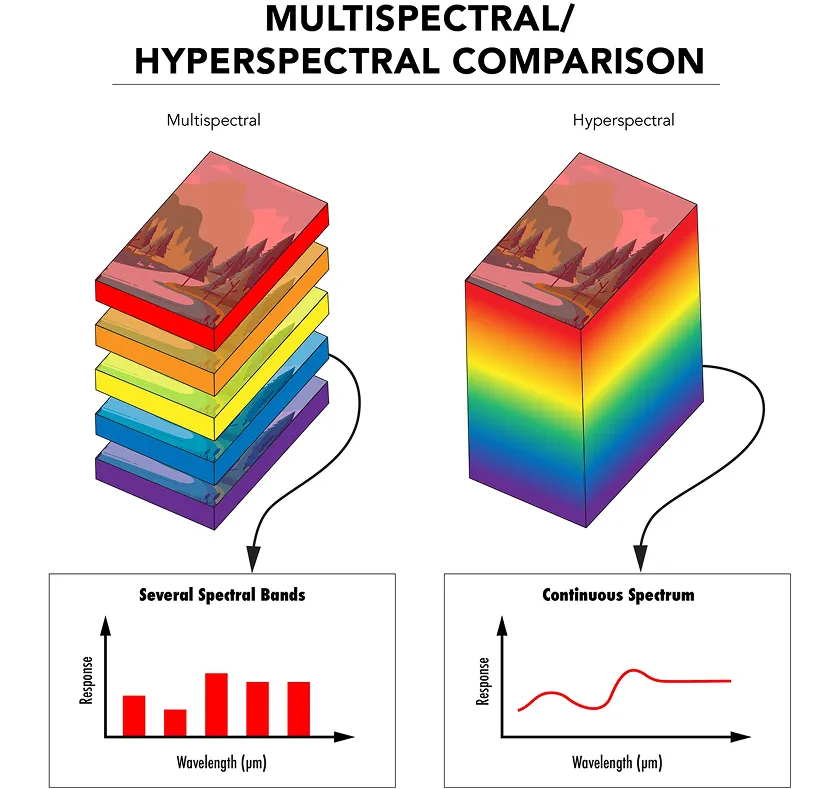

While infrared and thermal imaging capture specific aspects of the electromagnetic spectrum, multispectral imaging collects light from a few selected wavelength ranges, each chosen for a specific purpose, such as detecting healthy vegetation or identifying surface materials.

Hyperspectral imaging takes this a step further by capturing light across hundreds of very narrow and continuous wavelength ranges. This provides a detailed light signature for each pixel in the image, offering a much deeper understanding of any material being observed.

Both multispectral and hyperspectral imaging use special sensors and filters to capture light at different wavelengths. The data is then organized into a 3D structure called a spectral cube, with each layer representing a different wavelength.
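The spectral cube can be pictured as a stack of same-sized grayscale images, one per wavelength. The sketch below assumes a bands-first layout (`cube[band][row][col]`) and uses three made-up bands with illustrative reflectance values; real hyperspectral cubes have hundreds of bands and may use a different axis order.

```python
# Center wavelength of each layer, in nanometers (illustrative values).
wavelengths_nm = [550, 660, 850]

# cube[band][row][col]: reflectance between 0 and 1 for a 2x2 scene.
cube = [
    [[0.10, 0.12], [0.11, 0.30]],  # 550 nm (green)
    [[0.05, 0.06], [0.05, 0.28]],  # 660 nm (red)
    [[0.60, 0.58], [0.62, 0.31]],  # 850 nm (near-infrared)
]


def pixel_spectrum(cube, row, col):
    """Read one pixel through every layer to get its spectral signature."""
    return [band[row][col] for band in cube]


leaf_like = pixel_spectrum(cube, 0, 0)
soil_like = pixel_spectrum(cube, 1, 1)

for wavelength, a, b in zip(wavelengths_nm, leaf_like, soil_like):
    print(f"{wavelength} nm: {a:.2f} vs {b:.2f}")
```

Reading "down" through the layers at one pixel is what produces the per-pixel light signature: the first pixel reflects strongly in the near-infrared, while the second is nearly flat across all three bands.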

AI models can analyze this data to detect features that regular cameras or the human eye can't see. For example, in plant phenotyping, hyperspectral imaging can be used to monitor the health and growth of plants by detecting subtle changes in their leaves or stems, such as nutrient deficiencies or stress. This helps researchers assess plant health and optimize agricultural practices without the need for invasive methods.
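One simple, widely used way to turn spectral bands into a plant-health signal is the Normalized Difference Vegetation Index (NDVI), computed as (NIR - Red) / (NIR + Red). The sketch below is only an illustration of that formula on made-up reflectance values; the 0.3 cutoff is a hypothetical threshold, and AI models trained on full spectra can pick up far subtler cues than a single index.

```python
def ndvi(red, nir):
    """Normalized Difference Vegetation Index for one pixel."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


# Illustrative reflectance grids for the red and near-infrared bands.
red_band = [[0.05, 0.06], [0.05, 0.28]]
nir_band = [[0.60, 0.58], [0.62, 0.31]]

ndvi_map = [
    [ndvi(r, n) for r, n in zip(red_row, nir_row)]
    for red_row, nir_row in zip(red_band, nir_band)
]

# Hypothetical cutoff: flag pixels with low NDVI for closer inspection.
flagged = [
    (row, col)
    for row, values in enumerate(ndvi_map)
    for col, value in enumerate(values)
    if value < 0.3
]

print([[round(v, 2) for v in row] for row in ndvi_map])
print(flagged)
```

Healthy vegetation absorbs red light and reflects near-infrared strongly, so high values suggest vigorous growth, while values near zero suggest bare soil or stressed plants.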

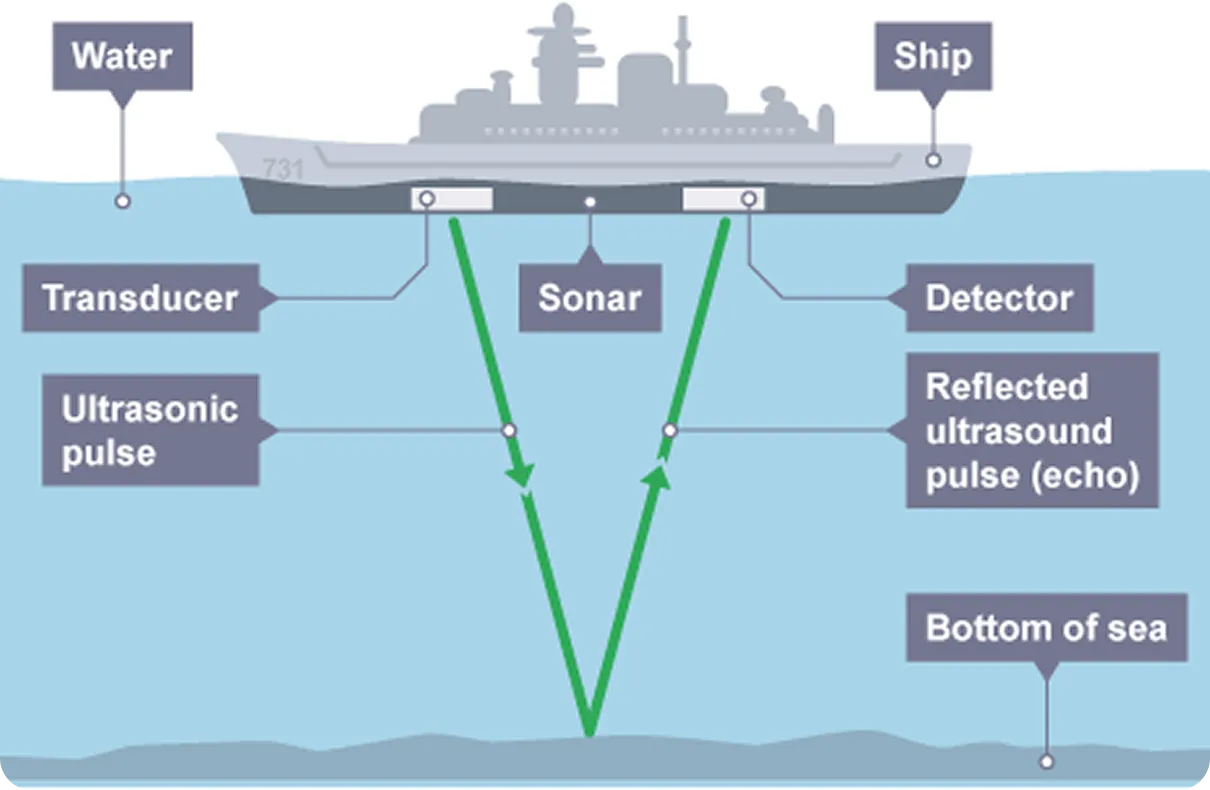

Radar and sonar imaging are technologies that detect and map objects by sending out signals and analyzing their reflections, similar to LiDAR. Unlike RGB imaging, which relies on visible light to capture visual information, radar uses radio waves (a longer-wavelength part of the electromagnetic spectrum), while sonar uses sound waves. Both radar and sonar systems emit pulses and measure the time it takes for the signal to bounce back from an object, providing information about its distance, size, and speed.
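The main practical difference in the timing calculation is how fast the signal travels. The sketch below applies the same echo-ranging formula to both cases, assuming radio waves travel at the speed of light and sound travels at roughly 1,500 m/s in seawater (an approximation, since the real value varies with temperature, salinity, and depth).

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # radio waves (radar)
SPEED_OF_SOUND_SEAWATER_M_S = 1500.0  # approximate; varies with conditions


def echo_range(round_trip_s, wave_speed_m_s):
    """Distance to the reflecting object from the round-trip echo time."""
    return wave_speed_m_s * round_trip_s / 2.0


radar_range = echo_range(1e-6, SPEED_OF_LIGHT_M_S)          # 1 microsecond echo
sonar_range = echo_range(0.2, SPEED_OF_SOUND_SEAWATER_M_S)  # 0.2 second echo

print(round(radar_range, 1), round(sonar_range, 1))
```

Both echoes correspond to a target about 150 meters away, yet the sonar echo takes around 200,000 times longer to return, which is one reason sonar systems update more slowly than radar.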

Radar imaging is especially useful when visibility is poor, such as during fog, rain, or nighttime. Because it doesn't rely on light, it can detect aircraft, vehicles, or terrain in complete darkness. This makes radar a reliable choice in aviation, weather monitoring, and autonomous navigation.

In comparison, sonar imaging is commonly used in underwater environments where light cannot reach. It uses sound waves that travel through water and bounce off submerged objects, allowing for the detection of submarines, mapping of ocean floors, and execution of underwater rescue missions. Advances in computer vision are now enhancing underwater detection further by combining sonar data with intelligent analysis to support better decision-making.

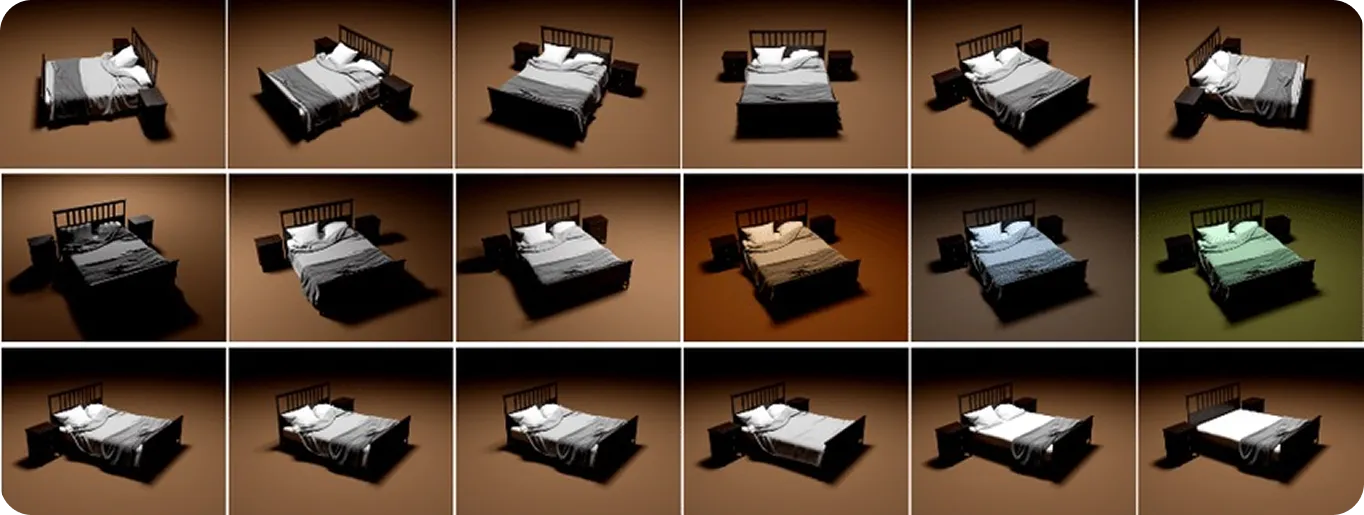

So far, the types of data we’ve discussed are all collected from the real world. Synthetic and simulated visual data, by contrast, are both artificially created. Synthetic data is generated from scratch using 3D modeling or generative AI to produce realistic-looking images or videos.

Simulated data is similar but involves creating virtual environments that replicate how the physical world behaves, including light reflection, shadow formation, and object movement. While all simulated visual data is synthetic, not all synthetic data is simulated. The key difference is that simulated data replicates realistic behavior, not just appearance.

These data types are useful for training computer vision models, particularly when real-world data is hard to collect or when specific, rare situations need to be simulated. Developers can create entire scenes, choose object types, positions, and lighting, and automatically add labels like bounding boxes for training. This helps build large, diverse datasets quickly, without the need for real photos or manual labeling, which can be costly and time-consuming.
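The key advantage, labels that come for free, can be shown with a toy generator. The sketch below draws one bright rectangle per image on a dark background and records its bounding box at the moment it is drawn. The function name, image size, and box format (`x_min, y_min, x_max, y_max`, inclusive) are assumptions for this example; real synthetic pipelines use 3D renderers or generative models instead of rectangles.

```python
import random


def make_sample(rng, width=16, height=12):
    """Render one bright rectangle and return (image, bounding_box)."""
    box_w = rng.randint(2, 5)
    box_h = rng.randint(2, 4)
    x0 = rng.randint(0, width - box_w)
    y0 = rng.randint(0, height - box_h)

    image = [[0] * width for _ in range(height)]
    for y in range(y0, y0 + box_h):
        for x in range(x0, x0 + box_w):
            image[y][x] = 255

    # The label is known exactly because we placed the object ourselves.
    bbox = (x0, y0, x0 + box_w - 1, y0 + box_h - 1)
    return image, bbox


rng = random.Random(0)  # fixed seed so the dataset is reproducible
dataset = [make_sample(rng) for _ in range(100)]

image, bbox = dataset[0]
print(bbox)
print(len(dataset), "labeled samples generated")
```

Because the generator controls object size and position, it is straightforward to oversample rare cases, such as objects at the image edge, that would be hard to collect in the real world.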

For example, in healthcare, synthetic data can be used to train models to segment breast cancer cells, where collecting and labeling large datasets of real images is difficult. Synthetic and simulated data provide flexibility and control, filling gaps where real-world visuals are limited.

Now that we’ve looked at how different types of visual data work and what they can do, let’s consider how to choose among them for specific tasks.

Sometimes, a single data type may not provide enough accuracy or context in real-world situations. This is where multimodal sensor fusion becomes key. By combining RGB with other data types like thermal, depth, or LiDAR, systems can overcome individual limitations, improving reliability and adaptability.

For example, in warehouse automation, using RGB for object recognition, depth for distance measurement, and thermal for detecting overheating equipment makes operations more efficient and safer. Ultimately, the best results come from selecting or combining data types based on the specific needs of your application.
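A minimal sketch of this kind of fusion is shown below. It assumes the RGB detector has already produced labeled bounding boxes, and that the depth and thermal maps are pixel-aligned with the RGB image (in practice, aligning sensors is a substantial calibration step). The function name, the sample grids, and the 60 °C limit are all hypothetical.

```python
from statistics import median


def fuse(detections, depth_m, temp_c, hot_limit_c=60.0):
    """Attach distance and temperature readings to each RGB detection."""
    fused = []
    for label, (x0, y0, x1, y1) in detections:
        pixels = [(y, x) for y in range(y0, y1 + 1) for x in range(x0, x1 + 1)]
        depths = [depth_m[y][x] for y, x in pixels]
        temps = [temp_c[y][x] for y, x in pixels]
        fused.append(
            {
                "label": label,
                "distance_m": median(depths),  # median resists stray depth values
                "max_temp_c": max(temps),
                "overheating": max(temps) > hot_limit_c,
            }
        )
    return fused


# Aligned 3x4 depth (meters) and thermal (Celsius) maps.
depth_m = [
    [5.0, 5.0, 2.0, 2.0],
    [5.0, 5.0, 2.0, 2.1],
    [5.0, 5.0, 5.0, 5.0],
]
temp_c = [
    [22.0, 22.0, 25.0, 71.0],
    [22.0, 22.0, 24.0, 26.0],
    [22.0, 22.0, 22.0, 22.0],
]

# Boxes as (x_min, y_min, x_max, y_max), inclusive, from a hypothetical RGB detector.
detections = [("motor", (2, 0, 3, 1)), ("pallet", (0, 0, 1, 2))]

for result in fuse(detections, depth_m, temp_c):
    print(result)
```

Each sensor answers a different question about the same object: RGB says what it is, depth says how far away it is, and thermal says whether it is running hot.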

When building Vision AI models, choosing the right type of visual data is crucial. Tasks like object detection, segmentation, and motion tracking rely not just on algorithms but also on the quality of the input data. Clean, diverse, and accurate datasets help reduce noise and enhance performance.

By combining data types like RGB, depth, thermal, and LiDAR, AI systems gain a more complete view of the environment, making them more reliable in various conditions. As technology continues to improve, it will likely pave the way for Vision AI to become faster, more adaptable, and more impactful across industries.

Join our community and explore our GitHub repository to learn more about computer vision. Discover various applications related to AI in healthcare and computer vision in retail on our solutions pages. Check out our licensing options to get started with Vision AI.